This article summarizes the primary enterprise data replication patterns used by data engineers in data integration, data lake, data hub, data warehouse, and real-time messaging scenarios. Data engineering tasks have become increasingly complex over the years, as the need for interoperability has grown significantly with the proliferation of SaaS systems. As companies continue to adopt best-in-class, purpose-built SaaS solutions (such as payment automation, AP automation, CRM, ERP, and many more), the corporate data landscape is turning into a series of largely disconnected data islands.

While SaaS platforms give organizations the tools they need to compete using the latest business-centric capabilities, the need to push data to and pull data from these platforms keeps increasing because of other priorities, both tactical and strategic.

To address this complexity and provide a more systematic approach to enterprise data integration, regardless of the technologies in scope, the data engineering team at Enzo Unified has established integration patterns that fall into three categories: Stateless, Stateful, and Composite.

These patterns are the result of years of successful implementations and lessons learned across industries, in small and large companies with varying levels of enterprise maturity and a wide range of technologies.

The patterns in this article are technology agnostic and apply to a variety of platforms, both as a source and as a target, including relational databases, NoSQL databases, HTTP/S endpoints, files, and messaging platforms. Unless noted otherwise, these patterns work with all system types.

The patterns below support batch, mini-batch (near-real-time), and real-time data integration processing. Individually or in combination, they support virtually any enterprise integration architecture, such as the Remote Site Replication architecture. In addition, using mini-batch and real-time streaming data capture together can enable other well-known architectures, such as the Lambda and Kappa architectures.
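As a rough illustration of how mini-batch processing sits between batch and real-time, the following Python sketch groups a stream of change events into fixed-size batches. The `mini_batches` function and the event shape are hypothetical and not part of any Enzo Unified product. Real platforms usually also flush a batch after a time interval, which this sketch omits for brevity.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def mini_batches(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a (possibly unbounded) stream of records into fixed-size batches.

    batch_size=1 behaves like record-at-a-time (real-time) processing;
    a batch_size larger than the input behaves like a single batch run.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch


# Hypothetical stream of change events; in practice this could come from a
# message queue, a change data capture feed, or a polling loop.
events = [{"id": i} for i in range(7)]
for batch in mini_batches(events, batch_size=3):
    print([e["id"] for e in batch])
```

With seven events and a batch size of three, the loop prints `[0, 1, 2]`, `[3, 4, 5]`, and `[6]`; the last, partial batch is still delivered.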

Data pipelines are a core component of any data replication topology; every company that moves data in or out implements a pipeline mechanism of some kind, even if it is a manual process or simply an FTP site used as the transport medium. Most companies, however, use a technology platform that provides the features needed to implement these patterns.

In some systems, data pipelines are referred to as data flows, change streams, ETL or ELT pipelines, or even packages or jobs. These pipelines typically add capabilities that vary by platform, such as data enrichment, filtering, schema management, and more. While important, these implementation-specific capabilities are not covered in the patterns below, to keep this article focused on enterprise patterns.

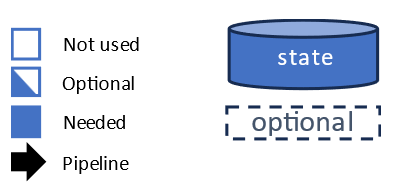

The following convention is used in the diagrams below. A small database icon is added to the diagram to indicate that state management is needed to implement the pattern, such as keeping high watermark values, change capture state, or a copy of the source or target data for comparison.
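To make the state represented by the database icon more concrete, the following Python sketch persists one high watermark per pipeline in a local JSON file, so that a later run can resume where the previous one stopped. The `WatermarkStore` class, the file layout, and the pipeline name are hypothetical; in practice this state usually lives in a database table or inside the integration platform itself.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, Optional


class WatermarkStore:
    """Hypothetical helper: one high watermark per pipeline, kept in a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def _load(self) -> Dict[str, str]:
        if not self.path.exists():
            return {}  # first run: no state yet
        return json.loads(self.path.read_text(encoding="utf-8"))

    def get(self, pipeline: str) -> Optional[str]:
        return self._load().get(pipeline)

    def set(self, pipeline: str, watermark: str) -> None:
        state = self._load()
        state[pipeline] = watermark
        # Write to a temporary file, then replace the original, which reduces
        # the risk of leaving a half-written state file after a crash.
        tmp = self.path.with_name(self.path.name + ".tmp")
        tmp.write_text(json.dumps(state, indent=2), encoding="utf-8")
        tmp.replace(self.path)


with tempfile.TemporaryDirectory() as tmp_dir:
    store = WatermarkStore(Path(tmp_dir) / "state.json")
    print(store.get("crm_to_warehouse"))  # None: no state on the first run
    store.set("crm_to_warehouse", "2025-01-15T10:30:00Z")
    print(store.get("crm_to_warehouse"))  # 2025-01-15T10:30:00Z
```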

Stateless patterns push data from one system to another without tracking whether the data was previously sent (that is, without state management). In other words, these patterns push data blindly, on demand or on a schedule.
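A minimal Python sketch of a stateless push follows, assuming the target can be modeled as a dictionary keyed by a hypothetical `id` column. Upserting by key is an assumption made here so that re-runs are idempotent on the target; truncate-and-reload is another common stateless variant. The point to notice is that nothing records what was sent, so every run re-reads and re-sends the full data set.

```python
from typing import Dict, Iterable

Row = Dict[str, object]


def stateless_push(source_rows: Iterable[Row], target: Dict[object, Row],
                   key: str = "id") -> int:
    """Push every source row to the target; no record of prior runs is kept."""
    sent = 0
    for row in source_rows:
        target[row[key]] = dict(row)  # upsert: insert or overwrite by key
        sent += 1
    return sent


source = [{"id": 1, "name": "Acme"}, {"id": 2, "name": "Globex"}]
target: Dict[object, Row] = {}
print(stateless_push(source, target))  # 2
print(stateless_push(source, target))  # 2 again: nothing tracks what was already sent
```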

Stateful patterns push data from one system to another along with a tracking mechanism that determines whether the data was previously sent. In other words, these patterns push data selectively, using a high watermark or a synthetic change capture mechanism, on demand or on a schedule.
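The two tracking mechanisms mentioned above can be sketched in Python as follows. This is an illustration under stated assumptions, not Enzo Unified's implementation. `watermark_extract` assumes the source exposes a monotonically increasing `modified_at` column stored as ISO-8601 UTC strings, which sort correctly as text. `synthetic_change_capture` is a hypothetical helper for sources without a reliable timestamp: it keeps a SHA-256 hash of each row last sent and forwards only rows whose hash changed. Both helpers detect inserts and updates only, not deletes. The watermark version uses a strict comparison, so a row that arrives late with exactly the watermark value would be skipped.

```python
import hashlib
import json
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]


def watermark_extract(rows: List[Row], watermark: Optional[str],
                      column: str = "modified_at") -> Tuple[List[Row], Optional[str]]:
    """High watermark: return rows newer than the watermark, plus the new watermark."""
    changed = [r for r in rows if watermark is None or str(r[column]) > watermark]
    # Keep the old watermark when nothing changed.
    new_mark = max((str(r[column]) for r in changed), default=watermark)
    return changed, new_mark


def row_hash(row: Row) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes the same.
    payload = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def synthetic_change_capture(rows: List[Row], state: Dict[object, str],
                             key: str = "id") -> List[Row]:
    """Return rows whose content differs from what was last sent.

    `state` maps each key to the hash last sent and is updated in place; a real
    pipeline would persist it only after the target write succeeds.
    """
    changed = []
    for r in rows:
        h = row_hash(r)
        if state.get(r[key]) != h:
            changed.append(r)
            state[r[key]] = h
    return changed


rows = [
    {"id": 1, "name": "Acme", "modified_at": "2025-01-01T00:00:00Z"},
    {"id": 2, "name": "Globex", "modified_at": "2025-01-02T00:00:00Z"},
]
changed, mark = watermark_extract(rows, None)
print(len(changed), mark)  # 2 2025-01-02T00:00:00Z
changed, mark = watermark_extract(rows, mark)
print(len(changed), mark)  # 0 2025-01-02T00:00:00Z

hashes: Dict[object, str] = {}
print(len(synthetic_change_capture(rows, hashes)))  # 2 on the first run
rows[0]["name"] = "Acme Corp"
print(len(synthetic_change_capture(rows, hashes)))  # 1: only the edited row
```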

Composite patterns combine two or more of the patterns defined above, possibly mixing stateless and stateful patterns, to provide the desired integration outcome.
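As one possible composite, the following Python sketch chains a stateful step (a high watermark selects only new or changed rows) with a stateless step (the same change set is pushed blindly to several targets). The `composite_run` function, the in-memory "warehouse" and "message log" targets, and the `modified_at` column are all hypothetical. The watermark advances only after every target accepts the batch. If one target fails after another succeeded, the next run re-sends the batch to both, so targets should tolerate duplicates (at-least-once delivery).

```python
from typing import Callable, Dict, List, Optional

Row = Dict[str, object]
Sink = Callable[[List[Row]], None]


def composite_run(rows: List[Row], state: Dict[str, Optional[str]],
                  sinks: List[Sink], column: str = "modified_at") -> int:
    """Stateful extraction followed by a stateless fan-out to every sink."""
    # Stage 1 (stateful): keep only rows newer than the stored watermark.
    mark = state.get("watermark")
    changed = [r for r in rows if mark is None or str(r[column]) > mark]
    if not changed:
        return 0
    # Stage 2 (stateless): push the same change set to each target.
    for sink in sinks:
        sink(changed)
    # Advance the watermark only after every sink accepted the batch;
    # an exception in any sink leaves the old watermark in place.
    state["watermark"] = max(str(r[column]) for r in changed)
    return len(changed)


warehouse: List[Row] = []
message_log: List[Row] = []
state: Dict[str, Optional[str]] = {}
rows = [{"id": 1, "modified_at": "2025-01-01T00:00:00Z"}]

print(composite_run(rows, state, [warehouse.extend, message_log.extend]))  # 1
print(composite_run(rows, state, [warehouse.extend, message_log.extend]))  # 0
```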

The patterns in this article are among the most commonly used; note, however, that this list is not comprehensive.

As the need to integrate disconnected systems continues to grow, establishing clear data integration and replication guidance is becoming more important for a number of reasons, from purely tactical to more strategic in nature.

To address this need, Enzo Unified has established integration patterns that fall into three categories: Stateless, Stateful, and Composite. Although the data engineering patterns proposed in this article are technology agnostic, current technology platforms offer varying levels of support for them.

© 2025 - Enzo Unified