The DataZen HTTP API allows developers to control and program DataZen agents and jobs. The HTTP API described in this documentation is the same one used by the DataZen Manager application.

In order to successfully access DataZen agents and jobs using the HTTP API, you will need the following:

A valid license key needs to be applied to the DataZen agent against which the HTTP APIs will be executed. The easiest way to apply the license key is to use the DataZen Manager application.

The HTTP API requires the use of Access Keys. A DataZen agent can be configured to restrict access to the API using the following mechanisms:

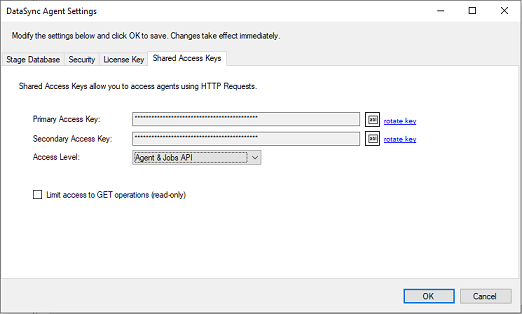

Shared Access Keys are automatically generated when an agent is configured the first time. To view the Shared Access Keys, start the DataZen Manager, select the agent, and choose Configuration -> Agent Settings, and choose the Shared Access Keys tab.

A few HTTP API handlers are not available for security reasons and require the DataZen Manager to administer:

The following HTTP call queries an agent listening on port 9559, authenticates with a Shared Access Key (passed in the Authorization header), and returns a JSON payload that provides the current version of the agent:

GET http://localhost:9559/version

Authorization: SharedKey 123456789426478564563123

{

"URL":"http://localhost:9559",

"Running":true,

"Major":1,

"Minor":0,

"Revision":23770,

"Build":7866,

"JobsRunning":0

}
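As a rough illustration, the call above could be prepared in Python with the standard library. The helper names `build_request` and `fetch_json` and the placeholder key are assumptions made for this sketch and are not part of DataZen; only the URL, the port, and the `Authorization: SharedKey ...` header format come from the example above.

```python
import json
import urllib.request


def build_request(base_url, path, shared_key, method="GET"):
    """Prepare an HTTP request that authenticates with a Shared Access Key."""
    request = urllib.request.Request(base_url.rstrip("/") + path, method=method)
    # Header format taken from the example above: "Authorization: SharedKey <key>"
    request.add_header("Authorization", "SharedKey " + shared_key)
    return request


def fetch_json(request, timeout=10):
    """Send the request and decode the JSON body (needs a reachable agent)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder key; replace it with a Shared Access Key from your own agent.
version_request = build_request(
    "http://localhost:9559", "/version", "123456789426478564563123"
)
```

Calling `fetch_json(version_request)` against a running agent should then return the decoded payload as a Python dictionary.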

If an API requires parameters, add them to the query string of the URL. The following example calls the /job/output endpoint:

GET http://localhost:9559/job/output?guid=B427BC0D-57DB-4C88-A847-7B4D07AFBCC5

Authorization: SharedKey 123456789426478564563123
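A minimal sketch of building such a URL in Python, assuming the parameters are plain key/value pairs. The function name `endpoint_url` is hypothetical; `urlencode` takes care of escaping values that contain reserved characters.

```python
from urllib.parse import urlencode


def endpoint_url(base_url, path, **params):
    """Return the endpoint URL with any parameters appended as a query string."""
    url = base_url.rstrip("/") + path
    # Skip parameters left as None so optional ones can simply be omitted.
    query = urlencode(
        {name: value for name, value in params.items() if value is not None}
    )
    return url + "?" + query if query else url


output_url = endpoint_url(
    "http://localhost:9559",
    "/job/output",
    guid="B427BC0D-57DB-4C88-A847-7B4D07AFBCC5",
)
```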

This section covers the HTTP APIs available to manage and monitor DataZen agents.

Parses a license file and returns detailed information about the license provided.

/licenseinfo

Retrieves licensing details given a license file payload.

| Response | |

| object DataZenLicense | The details of the license provided |

POST /licenseinfo

John Miller ACME jmiller@acme.info [SyncSource;SyncTarget;Enterprise] AF2FFFFF62606FFFFF152B007BFFFFF0A3B28F FFFFFF10F1731FFFFFFFFFFCCCBF8ABFFFFFFECBA6605E0FFFFFFFFFF1EEAFFF

{

"DataZenLicense": {

"AcceptSource":true,

"AcceptTarget":true,

"AllowDatabases":true,

"SchedulerAllowed":true,

"DataPipelineAllowed":true,

"DirectJobsOnly":false,

"AllowMessagingHubs":true,

"AllowDriveFiles":true,

"AllowPGPEncryption":true,

"MaxJobs":-1,

"AllowDataCopy":true,

"AllowRBAC":true,

"AllowHeuristics":true,

"Edition":"Enterprise",

"LicenseFile":"...",

"IsValid":false,

"LicenseValidationError":null,

"LicenseValidationResult":4,

"LastValidationMessage":"License is invalid"

}

}
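The response can be reduced to the few facts a caller usually needs. The sketch below assumes the payload shape shown in the sample above; the function name `summarize_license` is hypothetical, and the sample dictionary is a shortened copy of that response.

```python
import json


def summarize_license(payload):
    """Return (is_valid, message, enabled_flags) from a /licenseinfo response."""
    info = payload["DataZenLicense"]
    # Collect the boolean capability flags that are switched on.
    enabled = sorted(
        name for name, value in info.items()
        if value is True and name != "IsValid"
    )
    return info["IsValid"], info["LastValidationMessage"], enabled


# Shortened copy of the sample response above.
sample = json.loads("""
{"DataZenLicense": {"AcceptSource": true, "AllowRBAC": true,
 "DirectJobsOnly": false, "MaxJobs": -1, "Edition": "Enterprise",
 "IsValid": false, "LastValidationMessage": "License is invalid"}}
""")
is_valid, message, enabled = summarize_license(sample)
```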

Provides the current status of a DataZen agent.

/status

Retrieves configuration settings and status of a DataZen agent including licensing details.

| Response | |

| string URL | The URL this agent is listening on (ex: http://localhost:9559) |

| bool Running | When true, the agent is in a running status |

| string StageDatabaseServer | The name of the database server where the Stage database is located |

| string StageDatabaseName | The name of the Stage database |

| string StageLogin | The UserId used for the Stage database |

| string LicenseFile | The License File for the agent |

| object DataZenLicense | The licensing details of the agent |

| object Version | The version details of the agent |

GET /status

{

"URL":"http://localhost:9559",

"Running":true,

"StageDatabaseServer":"devlap04",

"StageDatabaseName":"EnzoStaging",

"StageLogin":"",

"LicenseFile":"...",

"DataZenLicense": {

"AcceptSource":true,

"AcceptTarget":true,

"AllowDatabases":true,

"SchedulerAllowed":true,

"DataPipelineAllowed":true,

"DirectJobsOnly":false,

"AllowMessagingHubs":true,

"AllowDriveFiles":true,

"AllowPGPEncryption":true,

"MaxJobs":-1,

"AllowDataCopy":true,

"AllowRBAC":true,

"AllowHeuristics":true,

"Edition":"Enterprise",

"LicenseFile":"...",

"IsValid":true,

"LicenseValidationError":null,

"LicenseValidationResult":4,

"LastValidationMessage":null

},

"Version": {

"URL":"http://localhost:9559",

"Running":true,

"Major":1,

"Minor":0,

"Revision":23770,

"Build":7866,

"JobsRunning":0

}

}

Provides the version of an agent.

/version

Retrieves the current version of a DataZen agent.

| Response | |

| object Version | The version details of the agent |

GET /version

{

"Version": {

"URL":"http://localhost:9559",

"Running":true,

"Major":1,

"Minor":0,

"Revision":23770,

"Build":7866,

"JobsRunning":0

}

}
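The /version samples on this page appear in two shapes: the introductory example shows the fields at the top level, while the sample above nests them under a Version node. A client could tolerate both, as in this sketch (the function name `version_fields` is hypothetical):

```python
def version_fields(payload):
    """Return the version numbers whether or not they sit under "Version"."""
    fields = payload.get("Version", payload)
    return {key: fields[key] for key in ("Major", "Minor", "Revision", "Build")}


# Values copied from the samples on this page.
flat = {
    "URL": "http://localhost:9559", "Running": True, "Major": 1, "Minor": 0,
    "Revision": 23770, "Build": 7866, "JobsRunning": 0,
}
nested = {"Version": flat}
```

The same helper also works on a /status response, since that payload carries a Version node as well.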

Manages connection strings stored securely in DataZen.

/connections

Gets available connection strings or the connection string for the key provided. To retrieve connection string metadata (without the actual connection string itself), call the /connections/metadata endpoint instead for better performance.

| Query Parameters | |

| string key | The key of the connection string to retrieve (optional) |

| string decrypt | When 1, decrypts the data node of the connection string (optional). By default, connection strings are returned encrypted in the 'data' node. |

| string type | When provided, filters the list with the Connection Type provided (ex: DB) (optional) |

| Response | |

| Key | The unique friendly name of the Central Connection String |

| Data | The connection string, returned encrypted unless decrypt=1 is specified |

| System | The system this connection points to (ex: MySQL, Oracle, AWS S3 Bucket, Google BigQuery, HTTP...) |

| UseCount | The number of jobs that reference this connection string |

| ConnectionType | The type of connection string (HTTP, Drive, DB, Messaging, BigData) |

GET /connections?key=MyAWSS3Connection

[

{

"Key":"MyAWSS3Connection",

"Data": "{\"service\":\"awss3\",\"displayName\":\"AWS S3 Bucket\",\"displayConnectionString\":\"testdata\",\"bucket\":\"testdata\",\"accessKey\":\"...\",\"accessSecret\":\"...\",\"region\":\"us-east-1\"}",

"System":"AWS S3 Bucket",

"UseCount":4,

"ConnectionType":"Drive"

}

]
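In the sample above, the Data node is itself a JSON document stored as a string, so reading individual settings takes a second decoding step. The sketch below assumes Data is readable (not encrypted); the function name `connection_settings` is hypothetical and the entry is a shortened copy of the sample.

```python
import json


def connection_settings(entry):
    """Decode the JSON text held in the "Data" node of one connection entry."""
    data = entry.get("Data") or ""
    if not data:
        return {}  # nothing to decode when "Data" is empty
    return json.loads(data)


# Shortened copy of the sample entry above.
entry = {
    "Key": "MyAWSS3Connection",
    "Data": "{\"service\":\"awss3\",\"bucket\":\"testdata\",\"region\":\"us-east-1\"}",
    "System": "AWS S3 Bucket",
    "UseCount": 4,
    "ConnectionType": "Drive",
}
settings = connection_settings(entry)
```

If Data is still encrypted, `json.loads` will most likely raise a `ValueError`, which a caller can catch to detect that decrypt=1 was not requested.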

Retrieves available connection strings information without the actual connection string itself (the 'data' node is always empty).

/connections/metadata

Gets information about all available connection strings or for the key provided, without returning the actual connection string.

| Query Parameters | |

| string key | The key of the connection string to retrieve (optional) |

| Response | |

| Key | The unique friendly name of the Central Connection String |

| Data | (always empty) |

| System | The system this connection points to (ex: MySQL, Oracle, AWS S3 Bucket, Google BigQuery, HTTP...) |

| UseCount | The number of jobs that reference this connection string |

| ConnectionType | The type of connection string (HTTP, Drive, DB, Messaging, BigData) |

GET /connections/metadata

[

{

"Key":"AWSS3",

"Data":"",

"System":"AWS S3 Bucket",

"UseCount":4,

"ConnectionType":"Drive"

},

{

"Key":"localtesttmp",

"Data":"",

"System":"Local Path",

"UseCount":1,

"ConnectionType":"Drive"

},

{

"Key":"devlap04",

"Data":"",

"System":"SQLServer",

"UseCount":3,

"ConnectionType":"DB"

}

]
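Because the metadata response is a flat list, it is easy to index on the client side. This sketch groups connection keys by ConnectionType, using the three entries from the sample above; the function name `keys_by_type` is hypothetical.

```python
from collections import defaultdict


def keys_by_type(connections):
    """Group connection keys by their ConnectionType."""
    grouped = defaultdict(list)
    for entry in connections:
        grouped[entry["ConnectionType"]].append(entry["Key"])
    return dict(grouped)


# Entries copied from the sample response above.
metadata = [
    {"Key": "AWSS3", "Data": "", "System": "AWS S3 Bucket",
     "UseCount": 4, "ConnectionType": "Drive"},
    {"Key": "localtesttmp", "Data": "", "System": "Local Path",
     "UseCount": 1, "ConnectionType": "Drive"},
    {"Key": "devlap04", "Data": "", "System": "SQLServer",
     "UseCount": 3, "ConnectionType": "DB"},
]
grouped = keys_by_type(metadata)
```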

Changes the status of the agent to "running" and resumes job processing. Any active job that missed its processing window will start.

/start

Allows the DataZen agent to resume processing scheduled jobs.

| Response | |

| OK | Success response code |

POST /start

OK

Changes the status of the agent to "stopped" and stops automatic job processing. Does not cancel jobs that are still running, but prevents future active jobs from starting on schedule.

/stop

Stop job processing.

| Response | |

| OK | Success response code |

POST /stop

OK
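One way to pair /stop and /start is to wrap maintenance work so that the agent is always resumed afterwards. The sketch below assumes a caller-supplied `post` callable that sends a POST to the given path on the agent; both `agent_paused` and `post` are hypothetical names, and a plain list stands in for the HTTP client here.

```python
from contextlib import contextmanager


@contextmanager
def agent_paused(post):
    """Stop scheduled job processing for the duration of a block, then resume.

    `post` is any callable that sends a POST to the given path on the agent.
    """
    post("/stop")
    try:
        yield
    finally:
        # Always resume, even if the work inside the block fails.
        post("/start")


# A list records the calls in place of a real HTTP client.
calls = []
with agent_paused(calls.append):
    calls.append("maintenance work")
```

Keep in mind that /stop does not cancel jobs that are already running, so the block may still overlap with in-flight executions.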

Dynamic Jobs allow you to run data movement jobs without first having to create them in DataZen. Dynamic Jobs only exist in memory in DataZen; their definitions are not stored. However, the execution summary of a Dynamic Job can be viewed, including its detailed output, even after it has completed. The following options are available for Dynamic Jobs:

/jobssummary?dynamic=1

/job/stopprocessing

/job/dynamic/executions

/job/dynamic

/job/log?execguid=

/job/output

/job/dynamic /job/dynamic?guid=... /job/dynamic?jobkey=...

Returns the definition of a dynamic job. If guid is provided, the jobkey parameter is ignored. If neither value is provided, all available Dynamic Jobs found in memory are returned. Restarting DataZen clears all Dynamic Jobs from memory.

GET /job/dynamic?guid=a3ff75315a4241d1958e1f9dbd53829f

[

{

"Guid":"a3ff75315a4241d1958e1f9dbd53829f",

"JobKey":"a3ff75315a4241d1958e1f9dbd53829f",

"SqlRead":"EXEC sharepoint._configuse 'default'; SELECT ID, Title, AccountNumber, CompanyId, Created, Modified FROM SharePoint.[list@companies]",

"Path":"C:\\tmp\\datasync_reader",

"UpsertColumns":"ID",

"TimestampCol":null,

"LastTsPointer":null,

"LastTsDelPointer":null,

"ConnectionString":"Data Source=localhost,9550;User Id=sa;password=.....",

"PropagateDelete":false,

"SyncStrategy":"Full",

"CreatedOn":"2022-10-12T18:31:46.2373302Z",

"Status":null,

"Initialize":false,

"Active":true,

"LastRunTime":"2022-10-12T18:31:50.0782741Z",

"CronSchedule":null,

"JsonData":

{

"DirectWriter":"__a3ff75315a4241d1958e1f9dbd53829f"

},

"PipelineDef":null,

"SystemName":"Generic",

"IsDBSource":true,

"IsHttpSource":false,

"IsDriveSource":false,

"IsQueueingSource":false,

"JobType":1,

"IsDirty":false,

"TrackLastTs":false,

"AuditLogEnabled":false,

"IsDirect":true,

"IsCDCSource":false

}

]

/job/dynamic /job/dynamic?resync=1

Executes a job using the definition provided. Dynamic jobs cannot be deleted or updated.

Although the JobKey and Guid parameters are optional when creating a dynamic job, it is best to provide a value if you intend to query the other endpoints (such as the /job/output endpoint).

Note that the Connection String is required, and can be either the key of an existing connection or a full connection string.

At a minimum, either the JobReader or JobWriter node must be provided; if both are provided, the job is considered a Direct Job and the writer will start as soon as the reader completes successfully. If only the Job Reader is provided, a Change Log file will be created and stored in the Path provided if any records are available.

HINT: Use the Export JSON button on a Job Definition in DataZen Manager to obtain a valid template for creating dynamic jobs.

| Query Parameters | |

| resync | (optional) When 1, runs a full resync of the source system (applies to Job Readers only) |

| recreate | (optional) When 1, attempts to truncate or recreate the target object (applies to Job Writers only) |

| testrun | (optional) When 1, reads a single record from the source system (applies to Job Readers only) |

| Payload | |

| object JobCreation | |

| Response | |

| OK | Success response code |

POST /job/dynamic

{

"JobReader": {

"SqlRead": "SELECT ID, Title, AccountNumber, CompanyId, Created, Modified FROM SharePoint.[list@companies]",

"Path": "C:\\tmp\\datasync_reader",

"UpsertColumns": "ID",

"ConnectionString": "enzo9550"

},

"JobWriter": {

"UpsertColumns": "ID",

"ConnectionString": "sql2017",

"TargetObject": "[regression].SPCompanies2"

}

}

OK
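The rules stated above (at least one of JobReader or JobWriter, and a connection string on each node provided) can be checked on the client before sending the request. This is a sketch under those stated rules only; the function name `dynamic_job_payload` is hypothetical, and the agent may enforce further requirements that are not covered here.

```python
import json


def dynamic_job_payload(reader=None, writer=None):
    """Build the JSON body for POST /job/dynamic from reader/writer settings."""
    if reader is None and writer is None:
        raise ValueError("Provide a JobReader, a JobWriter, or both")
    payload = {}
    for node, settings in (("JobReader", reader), ("JobWriter", writer)):
        if settings is None:
            continue
        # The text above states that the connection string is required.
        if not settings.get("ConnectionString"):
            raise ValueError(node + " needs a ConnectionString")
        payload[node] = settings
    return json.dumps(payload)


# Values copied from the sample request above.
body = dynamic_job_payload(
    reader={
        "SqlRead": "SELECT ID, Title, AccountNumber, CompanyId, Created, "
                   "Modified FROM SharePoint.[list@companies]",
        "Path": "C:\\tmp\\datasync_reader",
        "UpsertColumns": "ID",
        "ConnectionString": "enzo9550",
    },
    writer={
        "UpsertColumns": "ID",
        "ConnectionString": "sql2017",
        "TargetObject": "[regression].SPCompanies2",
    },
)
```

Supplying both nodes, as here, produces a Direct Job definition; passing only `reader` produces a reader-only job.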

Returns the history of executions available of a specific job given its unique identifier (guid). Although Dynamic Jobs are only stored in memory by DataZen, their executions are recorded in history and available even after restarting DataZen.

/job/dynamic/executions?guid=...

NOTE: The Unique Identifier of a Dynamic Job can be specified when it is started; this can make it easier to track the executions of related dynamic jobs over time. To view all available Dynamic Jobs still in memory, call the /jobssummary endpoint with the dynamic=1 option.

| Query Parameters | |

| string guid | The job guid to query (required) |

| Response | |

| guid | The guid of the dynamic job |

| execGuid | The execution id of the dynamic job |

| lastRunTime | The last run date/time of the dynamic job |

| errors | Number of errors detected |

| warnings | Number of warnings detected |

GET /job/dynamic/executions?guid=a3ff75315a4241d1958e1f9dbd53829f

[

{

"guid":"a3ff75315a4241d1958e1f9dbd53829f",

"execGuid":"a3ff75315a4241d1958e1f9dbd53829f",

"lastRunTime":"2022-10-12T18:31:50.077",

"errors":0,

"warnings":0

}

]
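When a dynamic job guid is reused across runs, the response lists several executions. This sketch picks the most recent one, assuming lastRunTime keeps the ISO-style format shown above; the function name `latest_execution` and the second list entry are hypothetical.

```python
from datetime import datetime


def latest_execution(executions):
    """Return the most recent execution entry, or None when the list is empty."""
    if not executions:
        return None
    return max(
        executions,
        key=lambda item: datetime.fromisoformat(item["lastRunTime"]),
    )


executions = [
    # First entry copied from the sample response above.
    {"guid": "a3ff75315a4241d1958e1f9dbd53829f",
     "execGuid": "a3ff75315a4241d1958e1f9dbd53829f",
     "lastRunTime": "2022-10-12T18:31:50.077", "errors": 0, "warnings": 0},
    # Hypothetical later run, added for illustration only.
    {"guid": "a3ff75315a4241d1958e1f9dbd53829f",
     "execGuid": "hypothetical-later-run",
     "lastRunTime": "2022-10-13T09:00:00.000", "errors": 1, "warnings": 0},
]
latest = latest_execution(executions)
```

The execGuid of the selected entry can then be passed to /job/log to read that run's output.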

Enables or disables a job. When re-enabled, job processing will resume at the next scheduled interval; if the last interval was missed, the job will start immediately.

This operation does not support Dynamic Jobs.

/job/enable?guid=123456789&active=0

Enables or disables a job.

| Input Parameters | |

| string guid | The job identifier |

| string active | The flag to disable or enable a job (0:disable, 1:enable) |

| Response | |

| OK | Success response code |

GET /job/enable?guid=123456789&active=0

OK

Creates, updates or deletes a Job Reader, Job Writer, or Direct Job. A Direct Job contains both a reader and a writer, and the job is considered successful when both complete successfully.

This operation does not support Dynamic Jobs.

Creates a Job Reader, Job Writer, or Direct Job. When creating a Direct Job, you need to provide both the JobReader and the JobWriter.

POST /job

{

"JobReader": {

"Guid": null,

"JobKey": "TEST-JOB-ALLDATABASES",

"SqlRead": "SELECT * FROM sys.databases",

"Path": "C:\\Tmp\\datasync_reader\\",

"UpsertColumns": "database_id",

"TimestampCol": "",

"LastTsPointer": null,

"LastTsDelPointer": null,

"ConnectionString": "sql2017",

"PropagateDelete": false,

"SyncStrategy": "Full",

"CreatedOn": "2022-10-12T19:24:14.3440036Z",

"Status": null,

"Initialize": false,

"Active": true,

"LastRunTime": null,

"CronSchedule": "0 */5 * ? * *",

"JsonData": {

"AuditLogEnabled": true,

"KeepChangedLogFiles": true,

"EncryptionFile": "",

"BypassTopN": false,

"TopNOverride": 0,

"DirectWriter": null,

"ExecutionTimeout": 0,

"BypassSchemaDetection": false,

"DeletedRecordsMethod": 0,

"DeletedRecordsSQL": null,

"CDCTokenDeletedField": "",

"MessagingOptions": null,

"DriveSourceSettings": null,

"Column2TableTxSettings": null,

"HttpSourceSettings": null,

"BigDataSourceSettings": null,

"JobTriggers": [],

"JobTriggersEnabled": false,

"CDCSettings": null,

"DynamicParameter": null

},

"SystemName": null,

"PipelineDef": []

},

"JobWriter": null

}

OK

/job

Updates a Job Reader, Job Writer, or Direct Job. When updating a DirectJob, you need to provide both the JobReader and the JobWriter. The Guid nodes must be provided.

PUT /job

{

"JobReader": {

"Guid": "a3ff75315a4241d1958e1f9dbd53829f",

"JobKey": "TEST-JOB-ALLDATABASES",

"SqlRead": "SELECT * FROM sys.databases",

"Path": "C:\\Tmp\\datasync_reader\\",

"UpsertColumns": "database_id",

"TimestampCol": "",

"LastTsPointer": null,

"LastTsDelPointer": null,

"ConnectionString": "sql2017",

"PropagateDelete": false,

"SyncStrategy": "Full",

"CreatedOn": "2022-10-12T19:24:14.3440036Z",

"Status": null,

"Initialize": false,

"Active": true,

"LastRunTime": null,

"CronSchedule": "0 */5 * ? * *",

"JsonData": {

"AuditLogEnabled": true,

"KeepChangedLogFiles": true,

"EncryptionFile": "",

"BypassTopN": false,

"TopNOverride": 0,

"DirectWriter": null,

"ExecutionTimeout": 0,

"BypassSchemaDetection": false,

"DeletedRecordsMethod": 0,

"DeletedRecordsSQL": null,

"CDCTokenDeletedField": "",

"MessagingOptions": null,

"DriveSourceSettings": null,

"Column2TableTxSettings": null,

"HttpSourceSettings": null,

"BigDataSourceSettings": null,

"JobTriggers": [],

"JobTriggersEnabled": false,

"CDCSettings": null,

"DynamicParameter": null

},

"SystemName": null,

"PipelineDef": []

},

"JobWriter": null

}

OK

Deletes a Job Reader, Job Writer, or Direct Job. When deleting a DirectJob, only the JobReader identifier is needed. This operation does not delete the execution log of the job.

| Query Parameters | |

| string guid | The job identifier |

| Response | |

| OK | Success response code |

DELETE /job?guid=a3ff75315a4241d1958e1f9dbd53829f

OK

Provides the latest in-memory log output for a given job. The output is limited to the last 200 entries available. For the output of a specific execution, see /job/log.

/job/output?guid=123456789

Gets the last 200 debug messages of a job given its job identifier.

| Input Parameters | |

| string guid | The job identifier |

| Response | |

| object[] OutputMessage | The list of output messages |

GET /job/output?guid=2b76c83008174135849ab0c7619a6d9e

[

{

"Sequence":1,

"Guid":"2b76c83008174135849ab0c7619a6d9e",

"DateAdded":"2021-07-16T14:16:49.5078227Z",

"Message":"Reader starting...",

"Level":1,

"ExecGuid": "b423b7621b2a4da1968c5372612000a6"

},

{

"Sequence":2,

"Guid":"2b76c83008174135849ab0c7619a6d9e",

"DateAdded":"2021-07-16T14:16:49.6019398Z",

"Message":"Strategy: full",

"Level":1,

"ExecGuid": "b423b7621b2a4da1968c5372612000a6"

},

{

"Sequence":3,

"Guid":"2b76c83008174135849ab0c7619a6d9e",

"DateAdded":"2021-07-16T14:16:49.7131806Z",

"Message":"Schema extracted successfully",

"Level":1,

"ExecGuid": "b423b7621b2a4da1968c5372612000a6"

}

]
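The messages can be turned into readable log lines on the client. This sketch sorts by Sequence and prints the timestamp, Level, and Message of each entry; the meaning of the Level values is not described on this page, so the number is shown as-is. The function name `format_output` is hypothetical.

```python
def format_output(messages):
    """Render OutputMessage entries as text lines ordered by Sequence."""
    ordered = sorted(messages, key=lambda message: message["Sequence"])
    return [
        "{0} [{1}] {2}".format(m["DateAdded"], m["Level"], m["Message"])
        for m in ordered
    ]


# Two entries from the sample above, deliberately out of order.
messages = [
    {"Sequence": 2, "DateAdded": "2021-07-16T14:16:49.6019398Z",
     "Message": "Strategy: full", "Level": 1},
    {"Sequence": 1, "DateAdded": "2021-07-16T14:16:49.5078227Z",
     "Message": "Reader starting...", "Level": 1},
]
lines = format_output(messages)
```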

Provides the execution history of a job. When the job is a Direct Job (with both a reader and writer), the response object contains a separate entry for each job, and the RecordsDirectWritten and RecordsDirectDeleted attributes on the Job Reader represent the Direct Writer's changes.

This operation does not support Dynamic Jobs.

/job/history?guid=123456789 /job/history?guid=123456789&limit=500&listenerType=writer&filter=all

Gets the execution history of a job.

| Query Parameters | |

| string guid | The job identifier |

| limit | Maximum number of rows to return (default: 100) |

| listenerType | The listener type (reader, writer) (default: reader). Direct Jobs are considered reader jobs. |

| filter | When empty or not provided (default), only returns execution history that identified or processed records, or that contain errors. When the filter is set to all, includes execution history that did not have any records identified or processed. |

| Response | |

| object[] SyncStatus | The list of execution history entries |

GET /job/history?guid=0c843d0f69dd4b449d3c4c43d47bf7c7

[

{

"Guid": "0c843d0f69dd4b449d3c4c43d47bf7c7",

"ListenerType": "reader",

"ElapsedSQLTime": 0.0361273,

"ElapsedMapRecudeTime": 0.1177181,

"RecordCount": 5,

"RecordsAvailable": 5,

"DeleteCount": 0,

"LastTsPointer": null,

"LastDelTsPointer": null,

"ErrorMessage": "",

"Success": true,

"JobKey": "SQL-2-SQLTest",

"ExecutionId": "1660662334171",

"PackageFile": "C:\\tmp\\datasync_reader\\SQL-2-SQLTest_1660662334171.eds",

"CreatedOn": "2022-08-16T15:05:32.94",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 5,

"RecordsDirectDeleted": 0,

"ExecGuid": "b5b37cd4190d4e97a01d33e00c82fa74",

"ExecRunTime": 4.83,

"ExecStartTime": "2022-08-16T15:05:32.94",

"ExecStopTime": "2022-08-16T15:05:36.77"

},

{

"Guid": "c920da3f3cac4bb99f8cde2f8b2f231c",

"ListenerType": "writer",

"ElapsedSQLTime": 0.1596194,

"ElapsedMapRecudeTime": 0.0,

"RecordCount": 197,

"RecordsAvailable": 197,

"DeleteCount": 0,

"LastTsPointer": null,

"LastDelTsPointer": null,

"ErrorMessage": "",

"Success": true,

"JobKey": "__SQL-2-SQLTest",

"ExecutionId": "1647274246435",

"PackageFile": "C:\\tmp\\datasync_reader\\SQL-2-SQLTest_1647274246435.eds",

"CreatedOn": "2022-03-14T16:10:46.633",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 0,

"RecordsDirectDeleted": 0,

"ExecGuid": null,

"ExecRunTime": -1.0,

"ExecStartTime": "0001-01-01T00:00:00",

"ExecStopTime": "0001-01-01T00:00:00"

}

]
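A history response can be condensed into per-listener totals. The sketch below counts runs, failures, and records for each ListenerType, using only fields that appear in the sample above; the function name `summarize_history` is hypothetical and the entries are trimmed copies of that sample.

```python
from collections import defaultdict


def summarize_history(entries):
    """Aggregate run, failure, and record counts per ListenerType."""
    totals = defaultdict(lambda: {"runs": 0, "failed": 0, "records": 0})
    for entry in entries:
        bucket = totals[entry["ListenerType"]]
        bucket["runs"] += 1
        bucket["records"] += entry["RecordCount"]
        if not entry["Success"]:
            bucket["failed"] += 1
    return dict(totals)


# Trimmed copies of the two sample entries above.
history = [
    {"ListenerType": "reader", "RecordCount": 5, "Success": True},
    {"ListenerType": "writer", "RecordCount": 197, "Success": True},
]
summary = summarize_history(history)
```

Reader and writer counts are kept apart because, per the description above, a Direct Job reports a separate entry for each side.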

Provides the configuration settings of a job, or all jobs, including any direct writer settings and last job status.

This operation does not support Dynamic Jobs.

/jobs/info /jobs/info?guid=123456789

Provides the configuration settings of a job.

| Query Parameters | |

| string guid | The job identifier (optional) |

| Response | |

| object[] JobInfoPackage | The list of job configuration packages |

GET /jobs/info?guid=0c843d0f69dd4b449d3c4c43d47bf7c7

[

{

"Guid": "5945167f7aef40aa9c8a7873432d2ebb",

"JobType": 1,

"JobKey": "FILMS-AzEventHub",

"Active": true,

"JobReader": {

"Guid": "5945167f7aef40aa9c8a7873432d2ebb",

"JobKey": "FILMS-AzEventHub",

"SqlRead": "SELECT * FROM Film LIMIT 10",

"Path": "C:\\tmp\\datasync_reader",

"UpsertColumns": "film_id",

"TimestampCol": "",

"LastTsPointer": "",

"LastTsDelPointer": "",

"ConnectionString": "mysql-sakila",

"PropagateDelete": false,

"SyncStrategy": "Full",

"CreatedOn": "2021-10-19T18:51:12",

"Status": "ready",

"Initialize": false,

"Active": true,

"LastRunTime": "2021-11-11T18:58:14",

"CronSchedule": "",

"JsonData": {

"AuditLogEnabled": true,

"EncryptionFile": "",

"BypassTopN": true,

"TopNOverride": 0,

"DirectWriter": "__FILMS-AzEventHub",

"ExecutionTimeout": 0,

"BypassSchemaDetection": true,

"DeletedRecordsMethod": 0,

"DeletedRecordsSQL": null,

"CDCTokenDeletedField": "",

"DriveFileFormat": "",

"DriveFilePattern": "",

"DriveSchemaFile": "",

"DriveFileSelectedColumns": "",

"DriveUpdatedFilesOnly": false,

"DriveIncludeSubfolders": false,

"DriveFolderColumnName": "",

"DriveAddFolderColumn": false,

"Column2TableTxSettings": null

},

"PipelineDef": [],

"SystemName": "MySQL",

"IsDBSource": true,

"IsHttpSource": false,

"IsDriveSource": false,

"IsQueueingSource": false,

"JobType": 1,

"IsDirty": false,

"TrackLastTs": false,

"AuditLogEnabled": true,

"IsDirect": true

},

"JobWriter": {

"Guid": "6e3c31f6d2d94efe96283bcc30195b44",

"JobKey": "__FILMS-AzEventHub",

"Path": "C:\\tmp\\datasync_reader",

"UpsertColumns": "film_id",

"ConnectionString": "datazenhub",

"PropagateDelete": false,

"SourceJobKey": "FILMS-AzEventHub",

"LastExecutionId": "1636657095008",

"CreatedOn": "2021-10-19T18:51:12",

"Status": "ready",

"Active": true,

"LastRunTime": "2021-11-11T18:58:15",

"CronSchedule": "",

"JsonData": null,

"TargetSystem": "MESSAGING",

"TargetObject": "",

"InitialExecutionId": "",

"UpsertScript": "{\"film_id\": {{film_id}}, \n\"title\": \"{{title}}\", \n\"description\": \"{{description}}\", \n\"release_year\": {{release_year}}, \n\"language_id\": {{language_id}}, \n\"original_language_id\": {{original_language_id}}, \n\"rental_duration\": {{rental_duration}}, \n\"rental_rate\": {{rental_rate}}, \n\"length\": {{length}}, \n\"replacement_cost\": {{replacement_cost}}, \n\"rating\": \"{{rating}}\", \n\"special_features\": \"{{special_features}}\", \n\"last_update\": \"{{last_update}}\"}",

"DeleteScript": "",

"LastSchemaHash": "",

"Options": {

"AutoRecreate": false,

"ExecTimeout": 0,

"BatchCount": 1000,

"MessagingUpsertSettings": {

"SystemType": "azeventhub",

"SystemTypeDisplay": "Azure Event Hub",

"EventHubName": "datazen1"

},

"MessagingDeleteSettings": {

"SystemType": "azeventhub",

"SystemTypeDisplay": "Azure Event Hub",

"EventHubName": ""

}

},

"SystemName": "Generic",

"PipelineDef": null,

"JobType": 2,

"IsDirty": false,

"IsDirect": false,

"AuditLogEnabled": false

},

"LastStatus": {

"Guid": "5945167f7aef40aa9c8a7873432d2ebb",

"ListenerType": "reader",

"ElapsedSQLTime": 0.0465737,

"ElapsedMapRecudeTime": 0.0006172,

"RecordCount": 1,

"RecordsAvailable": 1,

"DeleteCount": 0,

"ErrorMessage": "",

"Success": true,

"JobKey": "FILMS-AzEventHub",

"ExecutionId": "1636657095008",

"PackageFile": "C:\\tmp\\datasync_reader\\FILMS-AzEventHub_1636657095008.eds",

"CreatedOn": "2021-11-11T18:58:14",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 1,

"RecordsDirectDeleted": 0

},

"IsDirectMode": true,

"DirectLastStatus": {

"Guid": "6e3c31f6d2d94efe96283bcc30195b44",

"ListenerType": "writer",

"ElapsedSQLTime": 0.7656911,

"ElapsedMapRecudeTime": 0.0,

"RecordCount": 1,

"RecordsAvailable": 1,

"DeleteCount": 0,

"ErrorMessage": "",

"Success": true,

"JobKey": "__FILMS-AzEventHub",

"ExecutionId": "1636657095008",

"PackageFile": "C:\\tmp\\datasync_reader\\FILMS-AzEventHub_1636657095008.eds",

"CreatedOn": "2021-11-11T18:58:15",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 0,

"RecordsDirectDeleted": 0

}

}

]

GET /jobs/info?guid=c920da3f3cac4bb99f8cde2f8b2f231c

[

{

"Guid": "fd87f7b599084360a94b0d5fba2e5c5c",

"JobType": 1,

"JobKey": "Rabbit-Kafka",

"Active": false,

"JobReader": {

"Guid": "fd87f7b599084360a94b0d5fba2e5c5c",

"JobKey": "Rabbit-Kafka",

"SqlRead": "",

"Path": "C:\\tmp\\datasync_reader\\MsgConsumers",

"UpsertColumns": "",

"TimestampCol": "",

"LastTsPointer": "",

"LastTsDelPointer": "",

"ConnectionString": "rabbitmq1",

"PropagateDelete": false,

"SyncStrategy": "Full",

"CreatedOn": "2021-10-11T17:57:58",

"Status": "ready",

"Initialize": false,

"Active": false,

"LastRunTime": "2021-12-09T20:49:29",

"CronSchedule": "",

"JsonData": {

"AuditLogEnabled": true,

"BypassSchemaDetection": true,

"DirectWriter": "__Rabbit-Kafka",

"IsQueuingSource": true,

"MessagingOptions": {

"MessageProcessing": 0,

"MessagingColumns": "",

"MessagingFlushTTL": 1,

"RootPath": "",

"MessagingContentColumn": "_raw",

"MessagingBatchEnabled": true,

"MessagingBatchCount": 20,

"MessagingSampleMsg": "{\"film_id\": 1, \n\"title\": \"ACADEMY DINOSAUR\", \n\"description\": \"A Epic Drama of a Feminist And a Mad Scientist who must Battle a Teacher in The Canadian Rockies\", \n\"release_year\": 2006, \n\"language_id\": 1, \n\"original_language_id\": null, \n\"rental_duration\": 6, \n\"rental_rate\": 0.99, \n\"length\": 86, \n\"replacement_cost\": 20.99, \n\"rating\": \"PG\", \n\"special_features\": \"Deleted Scenes,Behind the Scenes\", \n\"last_update\": \"2006-02-15T05:03:42.0000000\"}",

"QueueNameOverride": "ttt",

"MessagingGroupName": null,

"PartitionKeys": null,

"IncludeMetadata": true,

"IncludeHeaders": true,

"MetadataFields": "",

"HeaderFields": ""

}

},

"PipelineDef": [

{

"ColumnName": "Engine",

"DataType": "String",

"ExpressionType": "SQL Expression (SQL Only)",

"SQLExpression": "'DATAZEN'",

"MaxLength": 0,

"IgnoreIfExists": false,

"Name": "DataDynamicColumn",

"Disabled": false

},

{

"Filter": "\\\"film_id\\\".+\\b\\d+.+",

"Column": "0",

"KeepRowOnConvertionError": false,

"KeepRowOnNullOrEmpty": false,

"RegexIgnoreCase": true,

"RegexSingleLine": true,

"FilterType": 3,

"Name": "DataFilter",

"Disabled": false

}

],

"SystemName": "RabbitMQ",

"IsDBSource": false,

"IsHttpSource": false,

"IsDriveSource": false,

"IsQueueingSource": true,

"JobType": 1,

"IsDirty": false,

"TrackLastTs": false,

"AuditLogEnabled": true,

"IsDirect": true

},

"JobWriter": {

"Guid": "9dce102385804200ac3ff1e015e8cd9a",

"JobKey": "__Rabbit-Kafka",

"Path": "C:\\tmp\\datasync_reader\\MsgConsumers",

"UpsertColumns": "",

"ConnectionString": "kafka-cloud",

"PropagateDelete": false,

"SourceJobKey": "Rabbit-Kafka",

"LastExecutionId": "1636649966604",

"CreatedOn": "2021-10-11T17:57:58",

"Status": "ready",

"Active": true,

"LastRunTime": "2021-12-09T20:49:30",

"CronSchedule": "",

"JsonData": null,

"TargetSystem": "MESSAGING",

"TargetObject": "",

"InitialExecutionId": "",

"UpsertScript": "",

"DeleteScript": "",

"LastSchemaHash": "",

"Options": {

"AutoRecreate": false,

"ExecTimeout": 0,

"BatchCount": 1000,

"MessagingUpsertSettings": {

"SystemType": "kafka",

"SystemTypeDisplay": "Kafka",

"TopicName": "",

"FieldKey": null,

"KeyFieldSeparator": "",

"Headers": null

},

"MessagingDeleteSettings": null,

"IsPassthroughMessaging": true,

"ErrorHandling": {

"ActionOnFail": 0,

"RetryCount": 0,

"RetryTTLSeconds": 0,

"RetryExponential": false,

"DeadLetterQueuePath": ""

}

},

"SystemName": "Generic",

"PipelineDef": null,

"JobType": 2,

"IsDirty": false,

"IsDirect": false,

"AuditLogEnabled": false

},

"LastStatus": {

"Guid": "fd87f7b599084360a94b0d5fba2e5c5c",

"ListenerType": "reader",

"ElapsedSQLTime": 0.0,

"ElapsedMapRecudeTime": 0.0467424,

"RecordCount": 1,

"RecordsAvailable": 1,

"DeleteCount": 0,

"ErrorMessage": "",

"Success": true,

"JobKey": "Rabbit-Kafka",

"ExecutionId": "1636649966604",

"PackageFile": "C:\\tmp\\datasync_reader\\MsgConsumers\\Rabbit-Kafka_1636649966604.eds",

"CreatedOn": "2021-11-11T16:59:26",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 1,

"RecordsDirectDeleted": 0

},

"IsDirectMode": true,

"DirectLastStatus": {

"Guid": "9dce102385804200ac3ff1e015e8cd9a",

"ListenerType": "writer",

"ElapsedSQLTime": 1.1952159,

"ElapsedMapRecudeTime": 0.0,

"RecordCount": 1,

"RecordsAvailable": 1,

"DeleteCount": 0,

"ErrorMessage": "",

"Success": true,

"JobKey": "__Rabbit-Kafka",

"ExecutionId": "1636649966604",

"PackageFile": "C:\\tmp\\datasync_reader\\MsgConsumers\\Rabbit-Kafka_1636649966604.eds",

"CreatedOn": "2021-11-11T16:59:26",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 0,

"RecordsDirectDeleted": 0

}

}

]

Provides access to a job execution output log. A job can be executed multiple times; however, each execution is assigned a unique identifier. This endpoint provides access to a specific job execution.

/job/log?execguid=123456789

Returns the execution log of a job given its execution guid. An execution guid is assigned to a job when it starts running. When a Direct Job is executed, both the reader and writer share the same execution guid.

| Query Parameters | |

| string execguid | The job execution guid (required) |

| Response | |

| object[] OutputMessage | The list of output messages |

GET /job/log?execguid=c920da3f3cac4bb99f8cde2f8b2f231c

[

{

"Sequence": 1,

"Guid": "5945167f7aef40aa9c8a7873432d2ebb",

"DateAdded": "2021-10-19T20:41:04",

"Message": "Reader starting...",

"Level": 0,

"ExecGuid": "c920da3f3cac4bb99f8cde2f8b2f231c"

},

{

"Sequence": 2,

"Guid": "5945167f7aef40aa9c8a7873432d2ebb",

"DateAdded": "2021-10-19T20:41:04",

"Message": "Strategy: full",

"Level": 0,

"ExecGuid": "c920da3f3cac4bb99f8cde2f8b2f231c"

},

{

"Sequence": 3,

"Guid": "5945167f7aef40aa9c8a7873432d2ebb",

"DateAdded": "2021-10-19T20:41:04",

"Message": "Source data extraction starting...",

"Level": 0,

"ExecGuid": "c920da3f3cac4bb99f8cde2f8b2f231c"

}

]

Returns the Job Status for a given execution id.

/job/status

Returns the status of a job execution given its execution guid.

This operation does not return Dynamic Jobs.

| Input Parameters | |

| string execguid | The job execution guid |

| Response | |

| object SyncStatus | The status of the job execution |

GET /jobs/status?execguid=5d66ebae-42d8-4a0d-b0c7-c4f323a5bb63

{

"SyncStatus": {

"Guid": "9dce102385804200ac3ff1e015e8cd9a",

"ListenerType": "reader",

"ElapsedSQLTime": 1.1952159,

"ElapsedMapRecudeTime": 0.0,

"RecordCount": 1,

"RecordsAvailable": 1,

"DeleteCount": 0,

"ErrorMessage": "",

"LastTsPointer": null,

"LastDelTsPointer": null,

"Success": true,

"JobKey": "TestReader",

"ExecutionId": "1704305959599",

"PackageFile": "C:\\tmp\\datasync_reader\\MsgConsumers\\TestReader_1704305959599.eds",

"CreatedOn": "2024-03-01T18:19:14",

"IsConsumerDeamonError": false,

"RecordsDirectWritten": 0,

"RecordsDirectDeleted": 0,

"ExecGuid": "5d66ebae-42d8-4a0d-b0c7-c4f323a5bb63",

"ExecRunTime": "5.00",

"ExecStartTime": "2024-01-11T16:59:26",

"ExecStopTime": "2024-01-11T17:00:01"

}

}

Provides summary information regarding all the jobs defined in this agent.

/jobs/summary

Returns all available jobs defined under the agent including high level summary information.

This operation does not return Dynamic Jobs.

| Input Parameters | |

| (none) | |

| Response | |

| Guid | The unique Guid of the job |

| TargetGuid | The unique Guid of the target job (Direct Jobs only) |

| JobType | 1: Job Reader, 2: Job Writer |

| JobKey | The JobKey of the job |

| Active | When true, the job is active and can be executed (manually or through a schedule) |

| IsDirectMode | When true, indicates a Direct job (a reader and writer defined); when false, either a Reader or Writer is defined |

| Path | The job path where the intermediate Sync Files will be stored |

| SourceSystemName | The name of the source system (ex: MySQL) |

| TargetSystemName | The name of the target system (ex: AzEventHub) |

| LastExecutionId | The last Execution Id of the job |

| LastTsPointer | The last High Watermark value (if any defined) for the job reader |

| LastTsDelPointer | The last High Watermark value for Deleted records identification (if any defined) |

| UpsertColumns | Comma-separated list of key columns, if any. If not specified, the target will use an 'append-only' mode |

| TimestampCol | The High Watermark column name to use (if any defined) for the job reader |

| CreatedOn | The date/time the job was created |

| CronSchedule | The Cron schedule that defines the job frequency (if blank, the job runs manually or through a Trigger) |

| LastRunTime | The last date/time the job was executed |

| Status | The current status of the job (Ready, Running, Listening) |

| IsQueueingSource | When True, the job is a Message Consumer |

| IsHttpSource | When True, the job accesses an HTTP REST endpoint |

| IsDriveSource | When True, the job accesses files on a Local Drive, Cloud Drive, or FTP site |

| IsDBSource | When True, the job reads data from a database |

| TargetSystem | The type of target system where data is being sent (Direct Jobs or Job Writers) |

| IsConsumerDeamonError | When True, the error identified is the result of an underlying Messaging platform error |

| Success | When True, the last job finished successfully |

| ErrorMessage | When Success is False, contains the error message of the source system |

| DirectSuccess | When a Direct Job, determines if the target operation was successful |

| DirectErrorMessage | When DirectSuccess is false, contains the error message of the target system |

| TrackLastTs | When True, indicates that the job tracks High Watermark values |

| ConnectionString | The Connection String Key being used |

| SourceSystem | The system name used by the Job Reader |

| IsCDCSource | When true, the source system is a Change Data Capture stream |

/jobs/summary

[

{

"Guid": "2f7e8ec3258c456eb3ca9a9b6ef383b1",

"TargetGuid": "71e6b0ab03a14f2dba5020e924713ea7",

"JobType": 1,

"JobKey": "FILMS-RabbitMQ",

"Active": false,

"IsDirectMode": true,

"Path": "C:\\tmp\\films",

"SourceSystemName": "MySQL",

"TargetSystemName": "RabbitMQ",

"LastExecutionId": "1636649963336",

"LastTsPointer": "",

"LastTsDelPointer": "",

"UpsertColumns": "film_id",

"TimestampCol": "",

"CreatedOn": "2021-10-11T17:46:25",

"CronSchedule": "0 * * ? * *",

"LastRunTime": "2021-11-11T23:32:01",

"Status": "ready",

"IsQueueingSource": false,

"IsHttpSource": false,

"IsDriveSource": false,

"IsDBSource": true,

"TargetSystem": "MESSAGING",

"IsConsumerDeamonError": false,

"Success": true,

"ErrorMessage": "",

"DirectSuccess": false,

"DirectErrorMessage": null,

"TrackLastTs": false,

"ConnectionString": "mysql-sakila",

"SourceSystem": "MySQL",

"IsCDCSource": false

},

{

"Guid": "708f8f348a514dbbb394f95aef6f373e",

"TargetGuid": "a71ab5bd3d324708b7c122bfecfef0b5",

"JobType": 1,

"JobKey": "Kafka-MSMQ",

"Active": false,

"IsDirectMode": true,

"Path": "C:\\tmp",

"SourceSystemName": "Kafka",

"TargetSystemName": "MSMQ",

"LastExecutionId": "1636653527797",

"LastTsPointer": "{\"0\":3331}",

"LastTsDelPointer": "",

"UpsertColumns": "",

"TimestampCol": "",

"CreatedOn": "2021-10-14T18:51:14",

"CronSchedule": "",

"LastRunTime": "2021-12-09T20:49:07",

"Status": "ready",

"IsQueueingSource": true,

"IsHttpSource": false,

"IsDriveSource": false,

"IsDBSource": false,

"TargetSystem": "MESSAGING",

"IsConsumerDeamonError": false,

"Success": true,

"ErrorMessage": "",

"DirectSuccess": true,

"DirectErrorMessage": "",

"TrackLastTs": false,

"ConnectionString": "kafka-cloud",

"SourceSystem": "Kafka",

"IsCDCSource": false

}

]
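The summary payload lends itself to simple reporting. The following Python sketch, offered as an illustration only, turns the JSON array into one line per job using fields documented in the table above. The function name is hypothetical, and the "manual/trigger" label is just a display choice for a blank CronSchedule.

```python
import json


def describe_jobs(summary_json: str) -> list[str]:
    """Return one human-readable line per job in a /jobs/summary payload."""
    lines = []
    for job in json.loads(summary_json):
        state = "active" if job["Active"] else "inactive"
        # A blank CronSchedule means the job runs manually or through a Trigger.
        schedule = job["CronSchedule"] or "manual/trigger"
        lines.append(
            f"{job['JobKey']} [{state}] "
            f"{job['SourceSystemName']} -> {job['TargetSystemName']} ({schedule})"
        )
    return lines
```

The payload string would typically come from a GET call to /jobs/summary sent with the Authorization header described at the top of this documentation.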

Updates a Job Reader's Timestamp pointer used to determine the next starting time window for either read or delete operations.

The Timestamp window applies when a Job Reader uses a Timestamp Column to select records from a known last High Watermark, or when a Job Reader uses a Change Data Capture stream to identify deleted records from a source system.

This operation does not support Dynamic Jobs.

/job/settspointer?guid=123456789&type=read

Updates the last Timestamp value of a Job Reader for either read or delete operations.

| Query Parameters | |

| string guid | The job identifier |

| string type | The type of window to modify (read, delete) |

| string val | The new value for the time window (optional); if empty or not provided, the Timestamp is set to an empty value. |

| Response | |

| OK | Success response code |

POST /job/settspointer?guid=123456789&type=read

OK
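The optional val parameter is not shown in the sample call above. As a hedged sketch, the request could be assembled in Python as follows. The agent address and key are the placeholder values used in this documentation, the function name is hypothetical, and the expected format of val is not specified here, so the value in the usage note is purely illustrative.

```python
import urllib.parse
import urllib.request
from typing import Optional

AGENT_URL = "http://localhost:9559"       # assumption: local agent on port 9559
SHARED_KEY = "123456789426478564563123"   # placeholder key, replace with your own


def build_settspointer_request(
    guid: str, pointer_type: str, val: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) the POST request that updates a Timestamp pointer."""
    if pointer_type not in ("read", "delete"):
        raise ValueError("type must be 'read' or 'delete'")
    params = {"guid": guid, "type": pointer_type}
    if val is not None:
        # Omitting val resets the Timestamp to an empty value.
        params["val"] = val
    query = urllib.parse.urlencode(params, quote_via=urllib.parse.quote)
    return urllib.request.Request(
        f"{AGENT_URL}/job/settspointer?{query}",
        data=b"",  # empty body so that urllib sends Content-Length: 0
        headers={"Authorization": f"SharedKey {SHARED_KEY}"},
        method="POST",
    )
```

For example, `build_settspointer_request("123456789", "read", "2021-10-19T20:41:04")` percent-encodes the colons in the value, while `build_settspointer_request("123456789", "delete")` produces a call that clears the delete pointer.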

Starts a job immediately if it is enabled (active). If the job is already running, this operation does nothing.

/job/start?guid=123456789

Starts a job immediately. If the job is already running, this command is ignored and returns OK. The job must be enabled for this operation to work.

This operation does not support Dynamic Jobs.

| Query Parameters | |

| string guid | The job identifier |

| Response | |

| OK | Success response code |

POST /job/start?guid=c920da3f3cac4bb99f8cde2f8b2f231c

OK
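A start call could be issued from Python along these lines. This is a minimal sketch under the same assumptions as the other samples in this documentation (local agent on port 9559, placeholder Shared Access Key); the function names are hypothetical.

```python
import urllib.parse
import urllib.request

AGENT_URL = "http://localhost:9559"       # assumption: local agent on port 9559
SHARED_KEY = "123456789426478564563123"   # placeholder key, replace with your own


def build_start_request(guid: str) -> urllib.request.Request:
    """Build (but do not send) the POST request that starts a job."""
    query = urllib.parse.urlencode({"guid": guid})
    return urllib.request.Request(
        f"{AGENT_URL}/job/start?{query}",
        data=b"",  # empty body so that urllib sends Content-Length: 0
        headers={"Authorization": f"SharedKey {SHARED_KEY}"},
        method="POST",
    )


def start_job(guid: str) -> bool:
    """Send the request; returns True when the agent answers OK."""
    with urllib.request.urlopen(build_start_request(guid), timeout=10) as response:
        return response.read().decode("utf-8").strip() == "OK"
```

Because an already running job also returns OK, a True result only confirms that the command was accepted, not that a new execution began.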

Stops a job as soon as possible. If the job is not currently running, this operation does nothing. If the job is currently running, this operation sends cancellation requests to all pending operations and returns as soon as possible.

/job/stop?guid=123456789

Stops a job.

| Query Parameters | |

| string guid | The job identifier |

| Response | |

| OK | Success response code |

POST /job/stop?guid=c920da3f3cac4bb99f8cde2f8b2f231c

OK
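Since the stop operation returns as soon as cancellation requests are sent, a caller may want to confirm that the job has actually left the running state. One possible approach, sketched here in Python, is to poll the Status field reported by /jobs/summary. The helper name and the fetch_summary callable are hypothetical: the caller supplies a function that returns the parsed /jobs/summary array, and the comparison is case-insensitive because the sample payloads report the status in lowercase.

```python
import time
from typing import Callable, Optional


def wait_until_not_running(
    guid: str,
    fetch_summary: Callable[[], list],
    attempts: int = 10,
    delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[dict]:
    """Poll the job summary until the job is no longer running.

    Returns the job entry once its Status is anything other than
    "running", or None if it is still running after all attempts.
    """
    for attempt in range(attempts):
        for job in fetch_summary():
            if job["Guid"] == guid and job["Status"].lower() != "running":
                return job
        if attempt < attempts - 1:
            sleep(delay)  # wait before asking the agent again
    return None
```

Passing the fetch function in as a parameter keeps the polling logic independent of how the HTTP call is made, and lets it be exercised with canned data.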

© 2025 - Enzo Unified